I am Linping YUAN (袁林萍), currently a research assistant professor in the Department of Computer Science and Engineering (CSE) at The Hong Kong University of Science and Technology (HKUST). I received my Ph.D. from the HKUST VisLab in the Department of CSE, supervised by Prof. Huamin Qu. Before that, I obtained my bachelor’s degree in Software Engineering from Xi’an Jiaotong University (XJTU) in 2019.

My research interests lie at the intersection of virtual/augmented reality (VR/AR), human-computer interaction (HCI), and data visualization (VIS). I design and develop algorithms, interactive tools, and visual analytics systems that facilitate the creation of various artifacts, including 2D visualizations and infographics, AR data videos, and VR animated stories. Specifically, my research 1) provides computational creativity support by mining design practices from large datasets with deep learning techniques, and 2) adopts data-driven approaches to help creators understand and improve the way audiences interact with their artifacts.

Feel free to drop me an email if you are interested in my research or would like to explore a research collaboration :D

Latest News

A project on which I serve as co-investigator has secured HKD 1M in funding from CORE, HKUST. The project will explore immersive visualization and deep learning techniques to advance the development of a digital twin of the Greater Bay Area.

I am serving as an Area Chair of the Computational Interaction Subcommittee for CHI 2025. Thanks for the invitation!

I obtained my Ph.D. degree and joined the Department of CSE at HKUST as a research assistant professor. Many thanks to my supervisor, family, mentors, peers, and everyone who supported me!

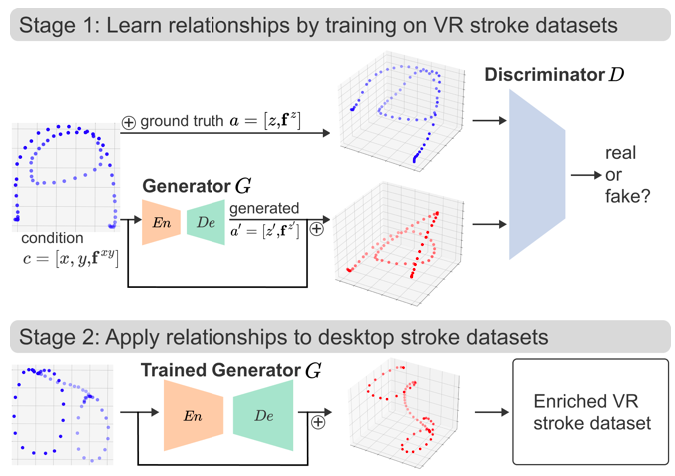

Our paper "Generating Virtual Reality Stroke Gesture Data from Out-of-Distribution Desktop Stroke Gesture Data" has been accepted by IEEE VR 2024! It is an important milestone in my journey toward becoming an independent researcher.

I am very excited to start a new journey as a visiting student at the Intelligent Interactive Systems Group, University of Cambridge, supervised by Prof. Per Ola Kristensson.

The exhibition China in Maps - 500 Years of Evolving Images has launched at HKUST! I am glad to have served as an AR developer on this amazing project.

Our research proposal "Augmenting Situated Visualizations with Tangible User Interfaces" was awarded over HKD 1M under the General Research Fund (GRF) of the Research Grants Council (RGC) of Hong Kong.

I was awarded a 2022 Style3D Graduate Fellowship. Thanks for the generous support of my research!

We won the Deloitte ESG Innovation Award (top prize under the ESG theme) at HackUST 2022.

Featured Publications

Featured Projects

The project seeks to transform traditional RES modeling by creating an accurate, flexible, and user-friendly digital twin of the Guangdong-Hong Kong-Macao Greater Bay Area. We are developing an immersive analytics system that lets users interact seamlessly with the digital twin and extract valuable insights from it.

ViePIE aims to promote sustainable lifestyles through AR gamification and digital twins. It enables individuals to perceive the environmental implications of their measurable activities and to immerse themselves in the impacts of climate change, and it empowers both individuals and organizations to make climate-smart choices. This project received the Deloitte ESG Innovation Award at HackUST 2022.

AR Ricci Map is an AR mobile app that blends digital maps with a physical map. It shows different versions of ancient maps and transforms 2D maps into 3D globes, giving exhibition visitors a new and engaging way to understand these historical maps. The app was an important part of the exhibition China in Maps - 500 Years of Evolving Images.